Deep Generative Modeling

TLoRA

[arXiv]

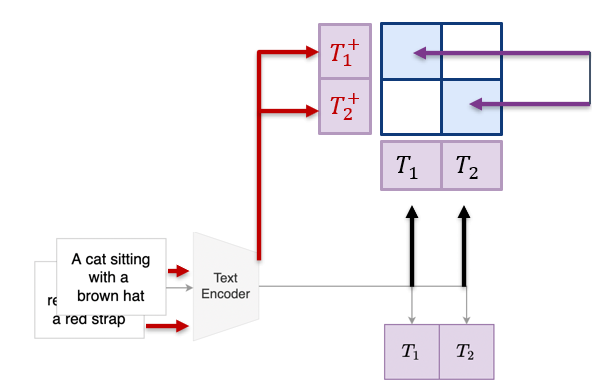

A tensor-decomposition-based PEFT method, shown to be effective on text-to-image generation tasks

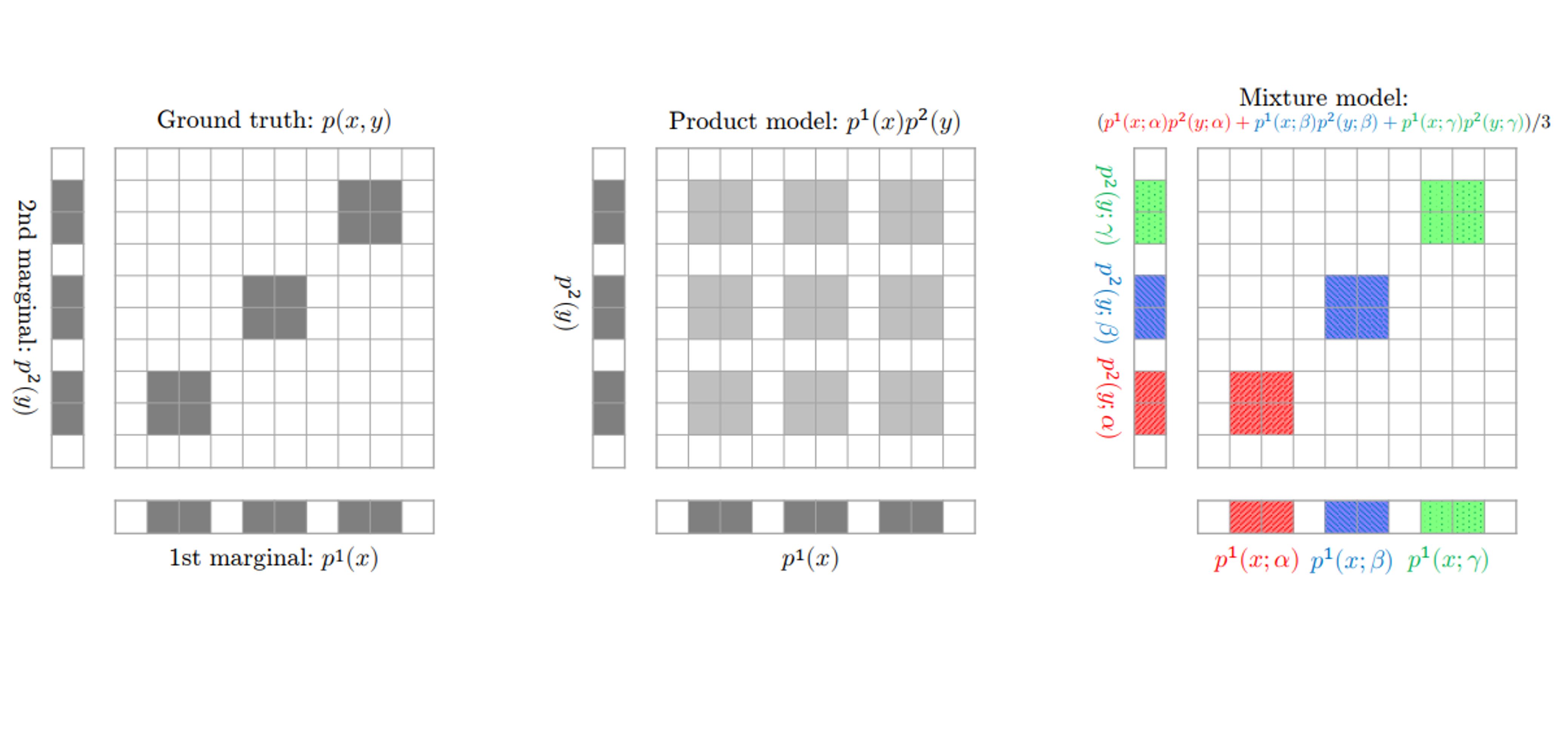

Di4C

[arXiv] [code]

Theoretical analysis of the limitations of current discrete diffusion models, and a method for effectively capturing element-wise dependencies

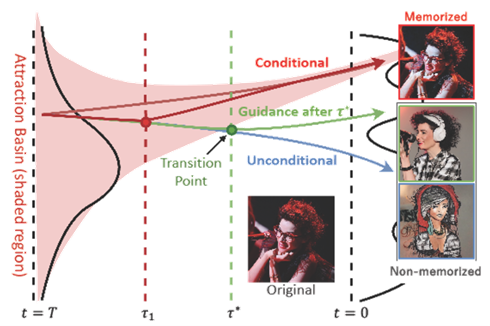

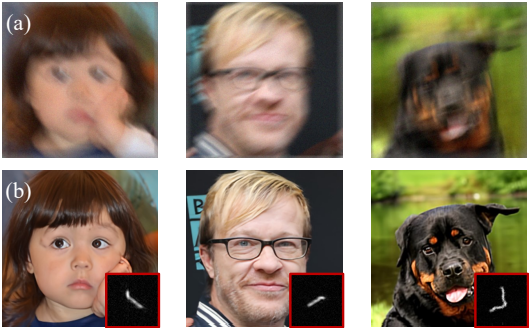

Memorization

[arXiv] [code]

Classifier-Free Guidance inside the Attraction Basin May Cause Memorization

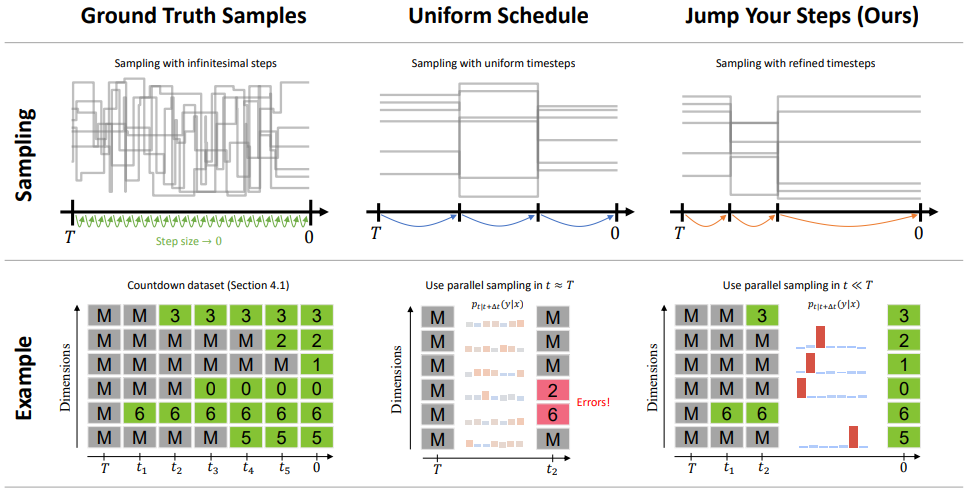

Jump Your Steps

[arXiv]

A general method to find an optimal sampling schedule for inference in discrete diffusion

HERO-DM

[arXiv] [demo]

A method that efficiently leverages online human feedback to fine-tune Stable Diffusion for a wide range of tasks

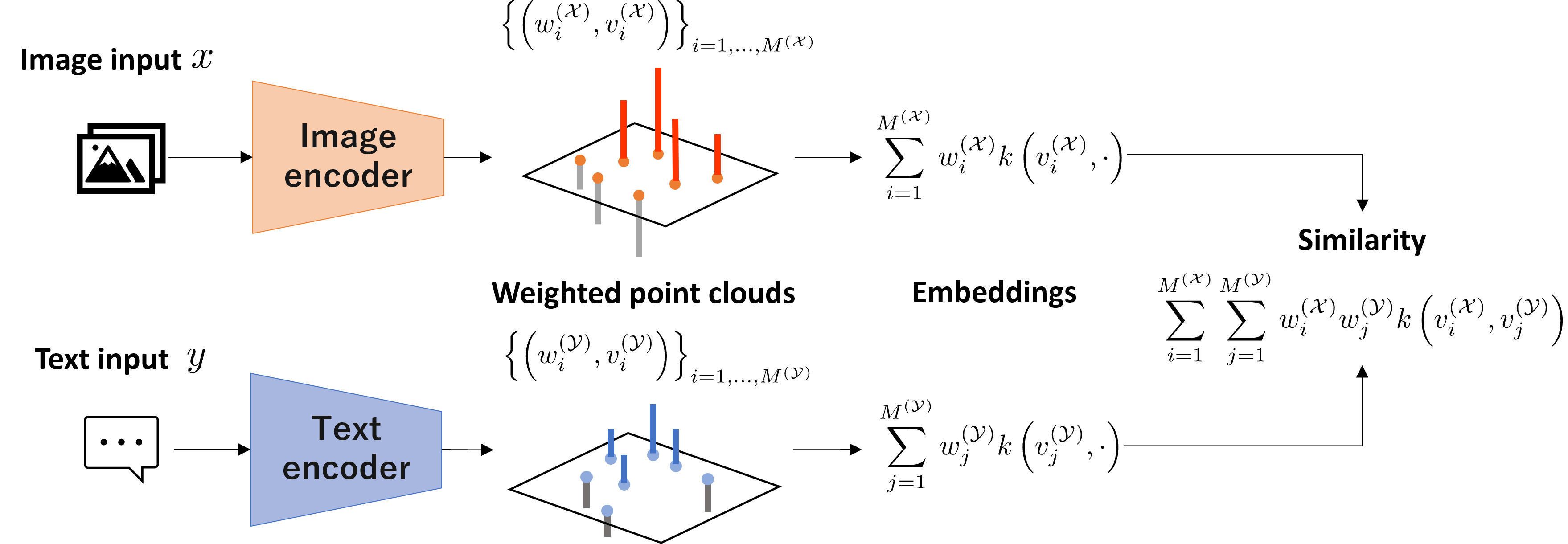

WPSE

[arXiv]

An enhanced multimodal representation using weighted point clouds and its theoretical benefits

PaGoDA

[arXiv]

A 64x64 pre-trained diffusion model is all you need for 1-step high-resolution SOTA generation

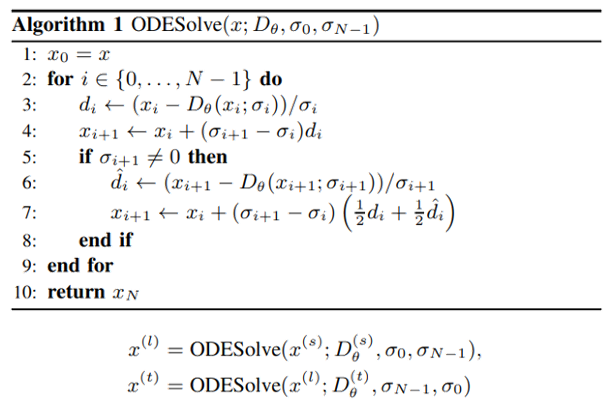

CTM

[arXiv] [demo]

A unified framework that enables diverse samplers and SOTA 1-step generation

Applications:

[SoundGen]

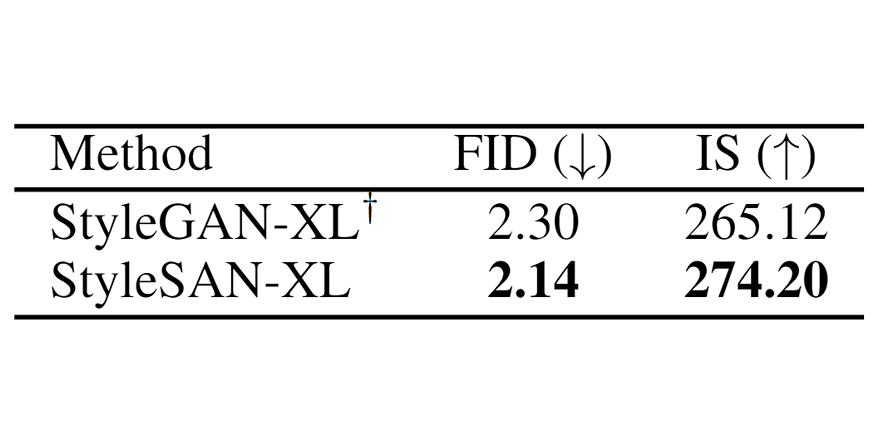

SAN

[arXiv] [code] [demo]

Enhancing GANs with metrizable discriminators

Applications:

[Vocoder]

MPGD

[arXiv] [demo]

A fast, efficient, training-free, and controllable diffusion-based generation method

GibbsDDRM

[PMLR] [code]

Achieving blind inversion using DDPM

Applications:

[DeReverb]

[SpeechEnhance]

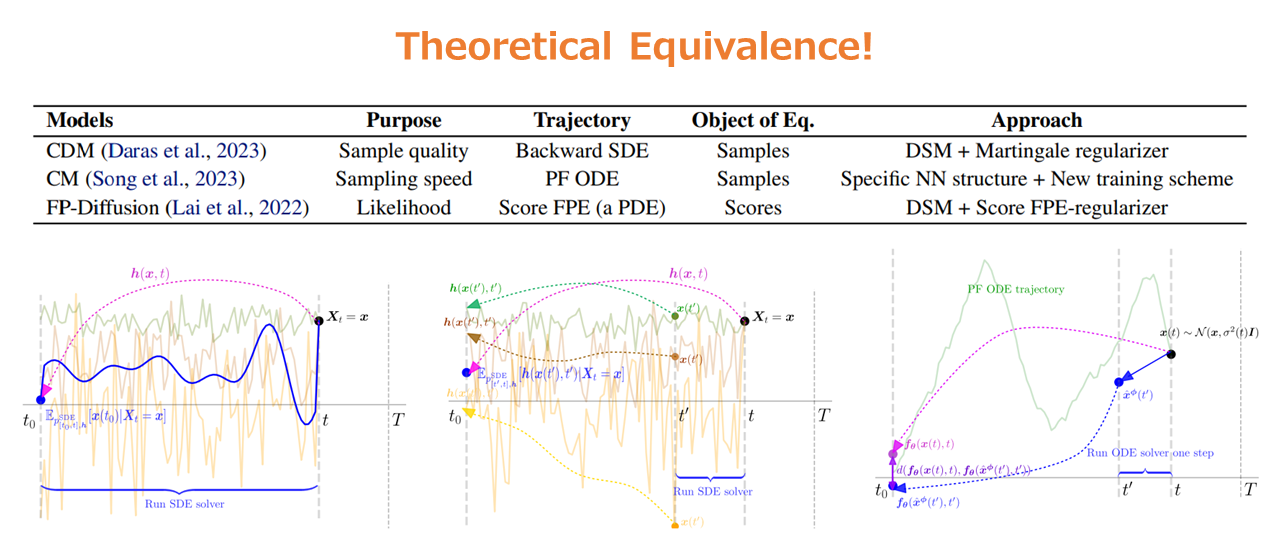

Consistency-type Models

[arXiv]

A theoretically unified framework for "consistency" in diffusion models

Multimodal NLP

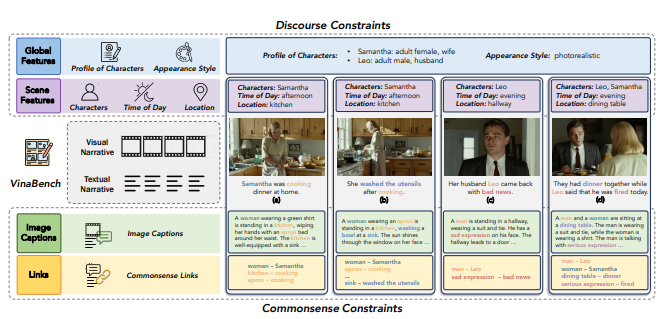

VinaBench

[CVPR] [arXiv] [data]

VinaBench: Benchmark for Faithful and Consistent Visual Narratives

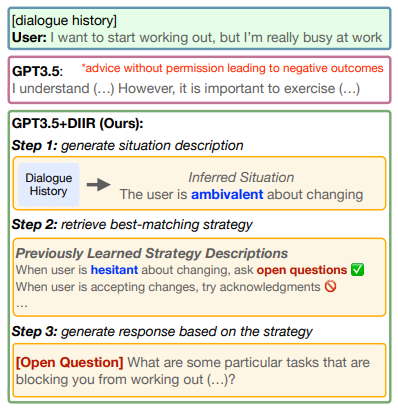

DIIR

[ACL] [arXiv] [code]

Few-shot Dialogue Strategy Learning for Motivational Interviewing via Inductive Reasoning

Music Technologies

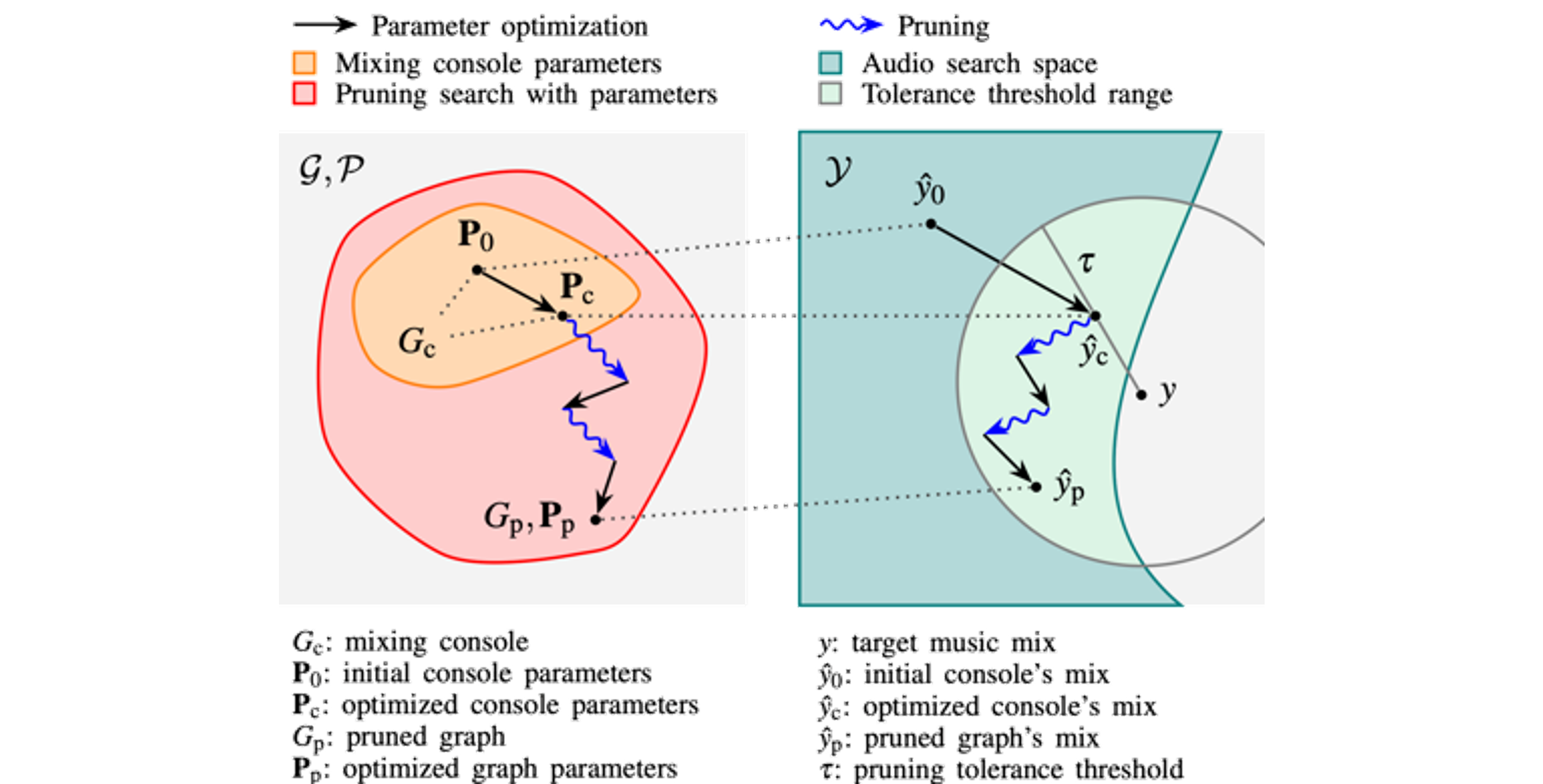

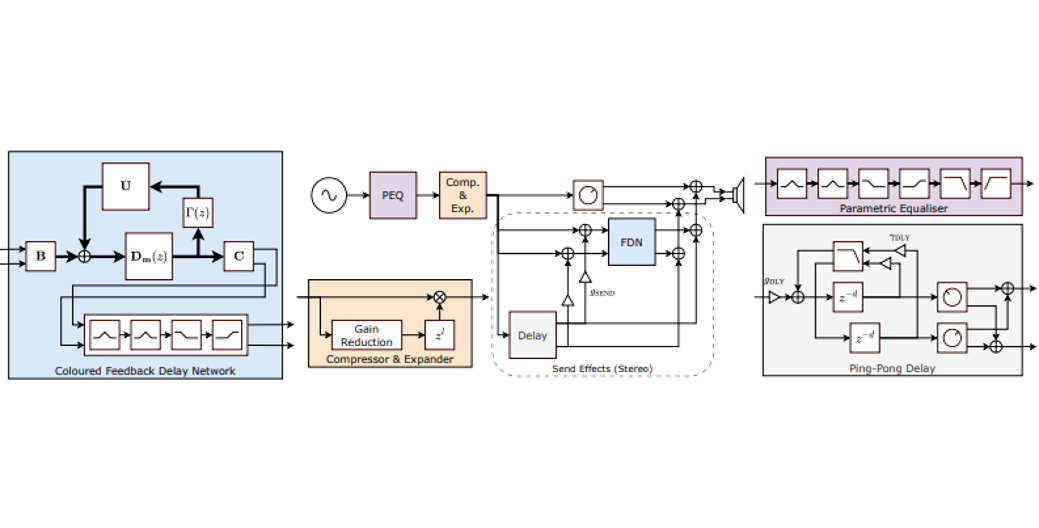

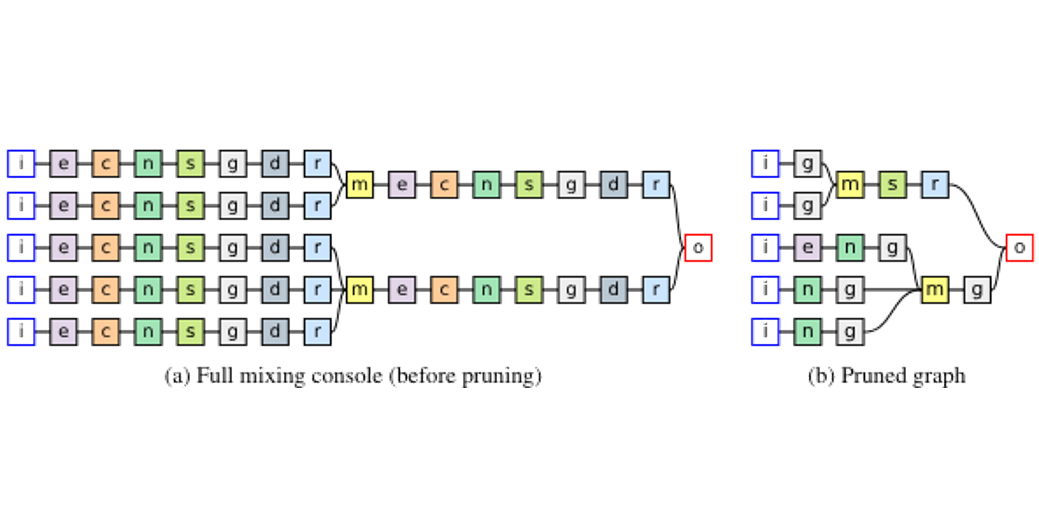

GRAFx (ext.)

[JAES] [code] [demo]

Reverse Engineering of Music Mixing Graphs with Differentiable Processors and Iterative Pruning

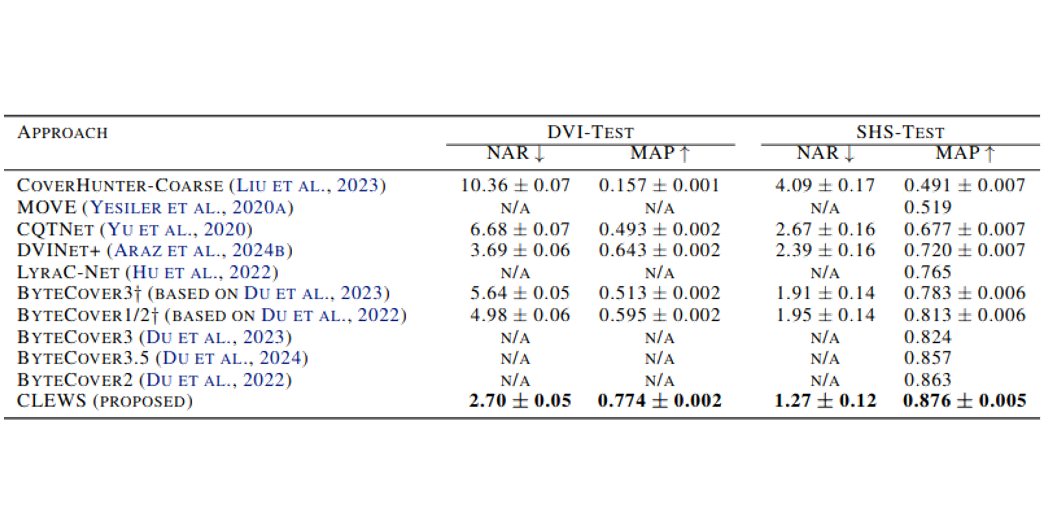

CLEWS

[arXiv]

Supervised contrastive learning from weakly-labeled audio segments for musical version matching

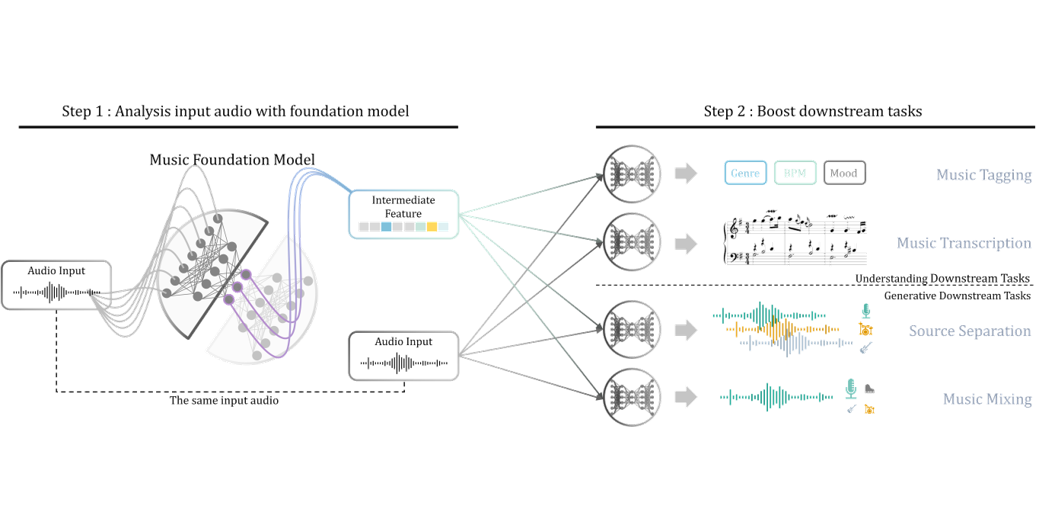

MFM as Generic Booster

[OpenReview] [arXiv]

Music Foundation Model as Generic Booster for Music Downstream Tasks

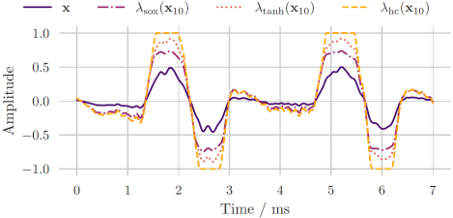

DiffVox

[arXiv]

DiffVox: A Differentiable Model for Capturing and Analysing Professional Effects Distributions

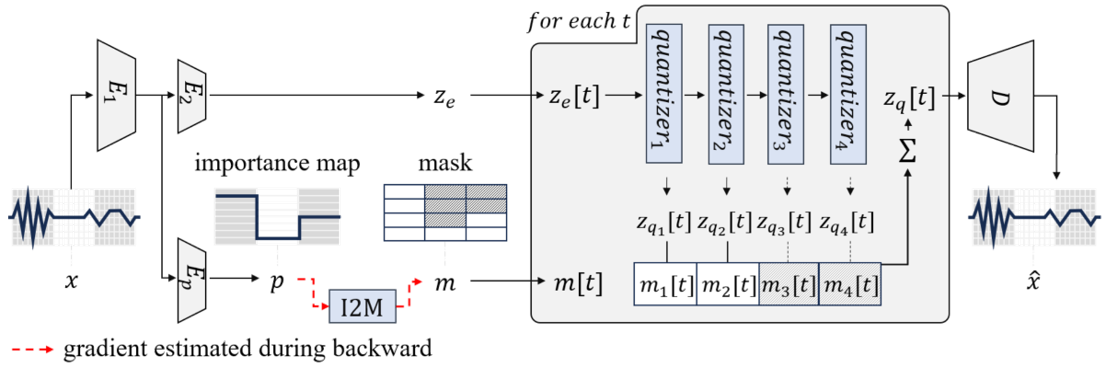

Variable Bitrate RVQ

[arXiv]

VRVQ: Variable Bitrate Residual Vector Quantization for Audio Compression

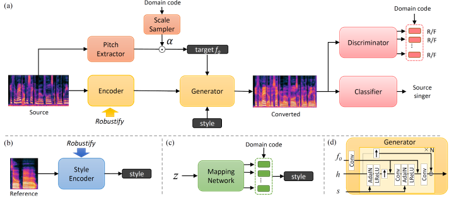

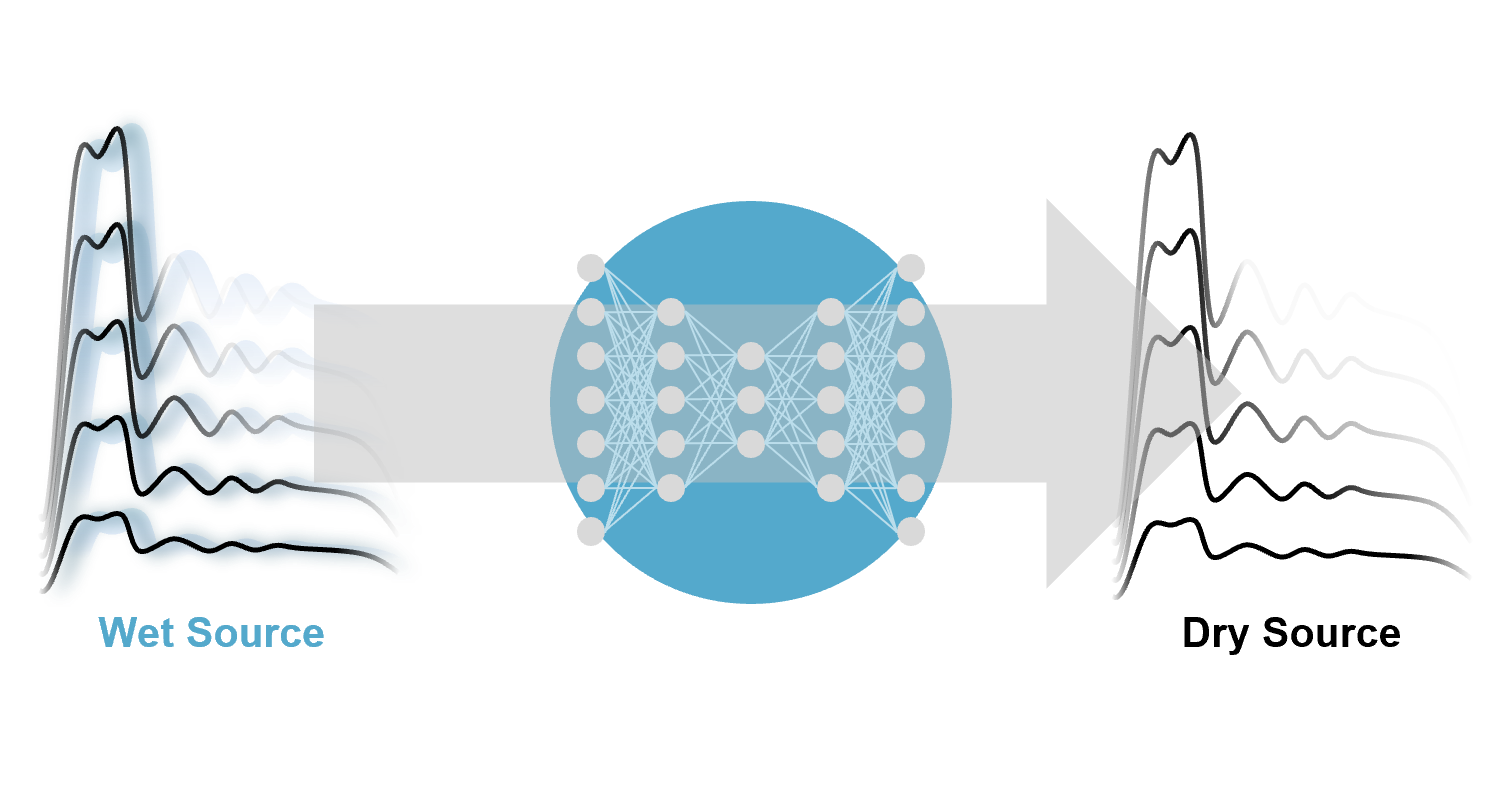

Instr. Timbre Transfer

[arXiv] [code] [demo]

Latent Diffusion Bridges for Unsupervised Musical Audio Timbre Transfer

Mixing Graph Estimation

[arXiv] [code] [demo]

Searching For Music Mixing Graphs: A Pruning Approach

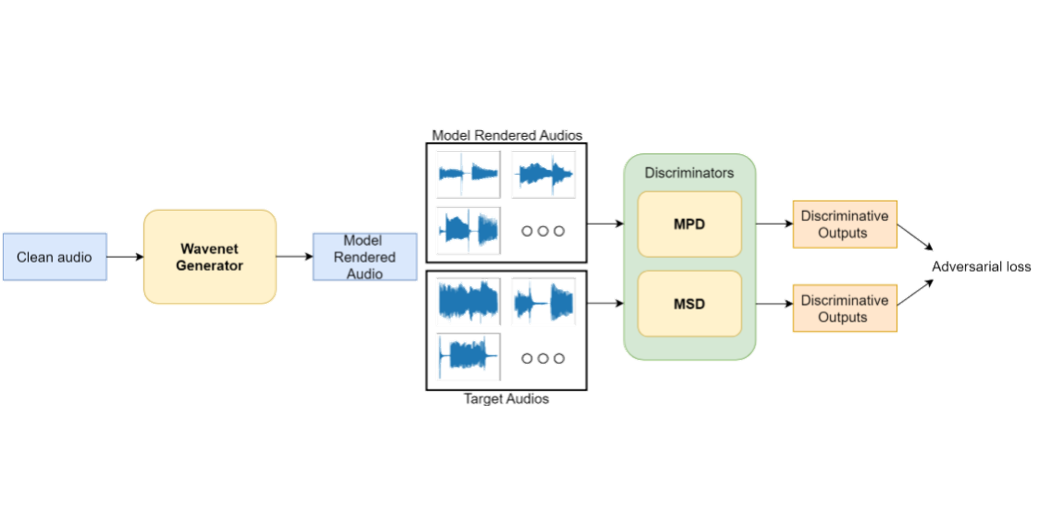

Guitar Amp. Modeling

[arXiv]

Improving Unsupervised Clean-to-Rendered Guitar Tone Transformation Using GANs and Integrated Unaligned Clean Data

Text-to-Music Editing

[arXiv] [code] [demo]

MusicMagus: Zero-Shot Text-to-Music Editing via Diffusion Models

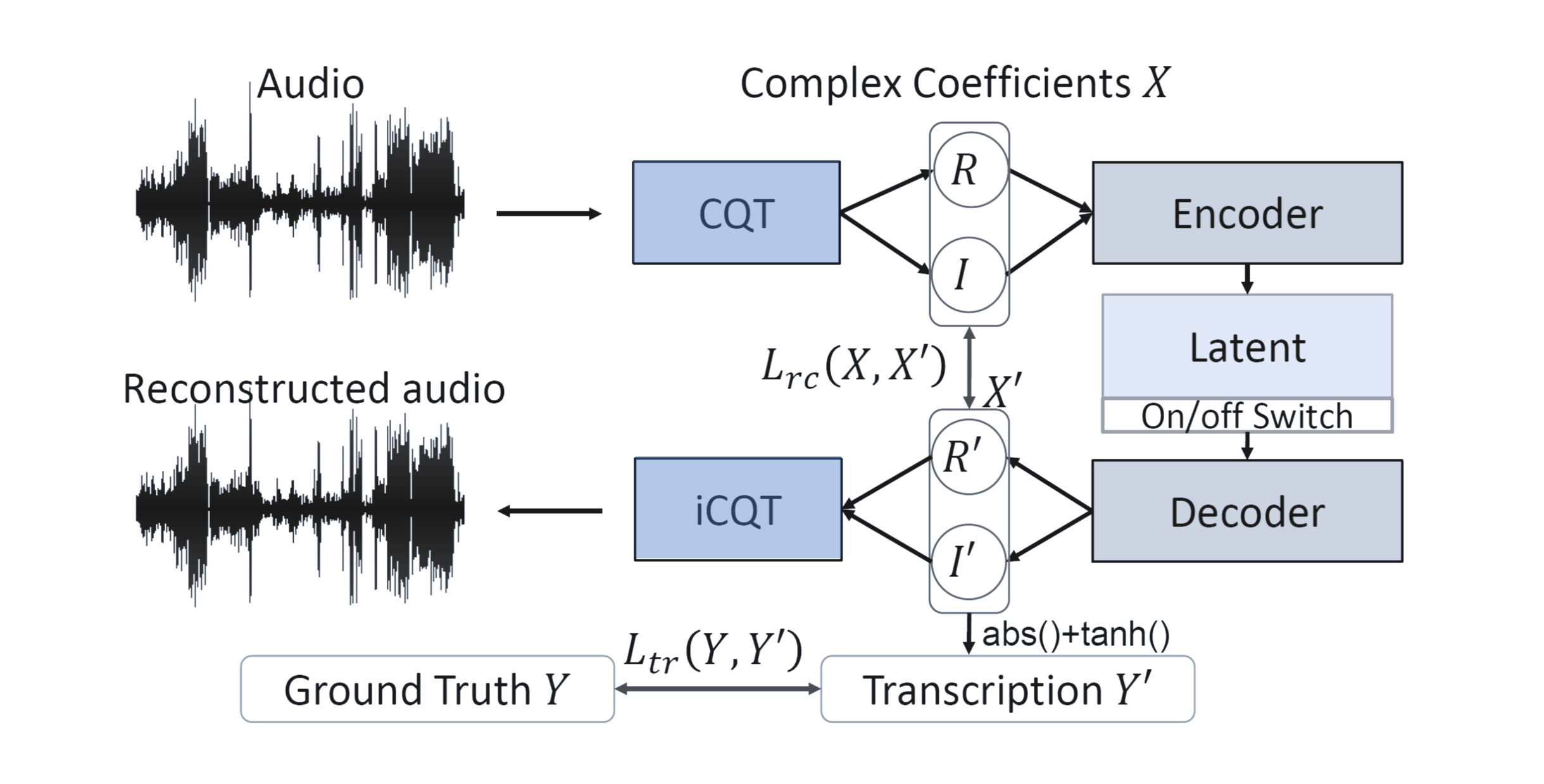

Instr.-Agnostic Trans.

[IEEE] [arXiv]

Timbre-Trap: A Low-Resource Framework for Instrument-Agnostic Music Transcription

Vocal Restoration

[IEEE] [arXiv] [demo]

VRDMG: Vocal Restoration via Diffusion Posterior Sampling with Multiple Guidance

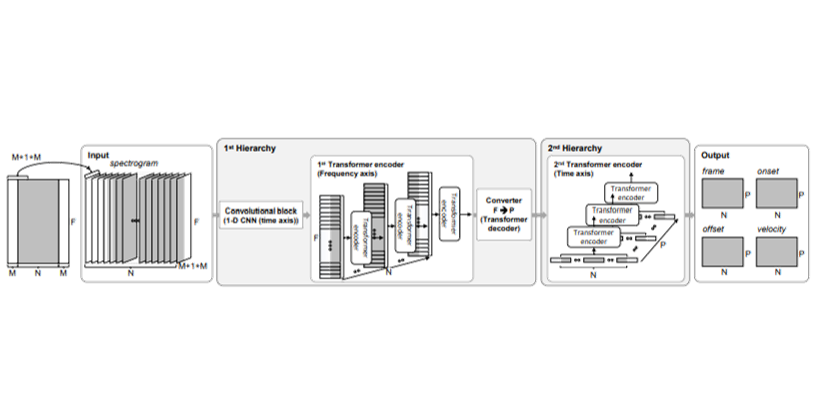

hFT-Transformer

[arXiv] [code]

Automatic Piano Transcription with Hierarchical Frequency-Time Transformer

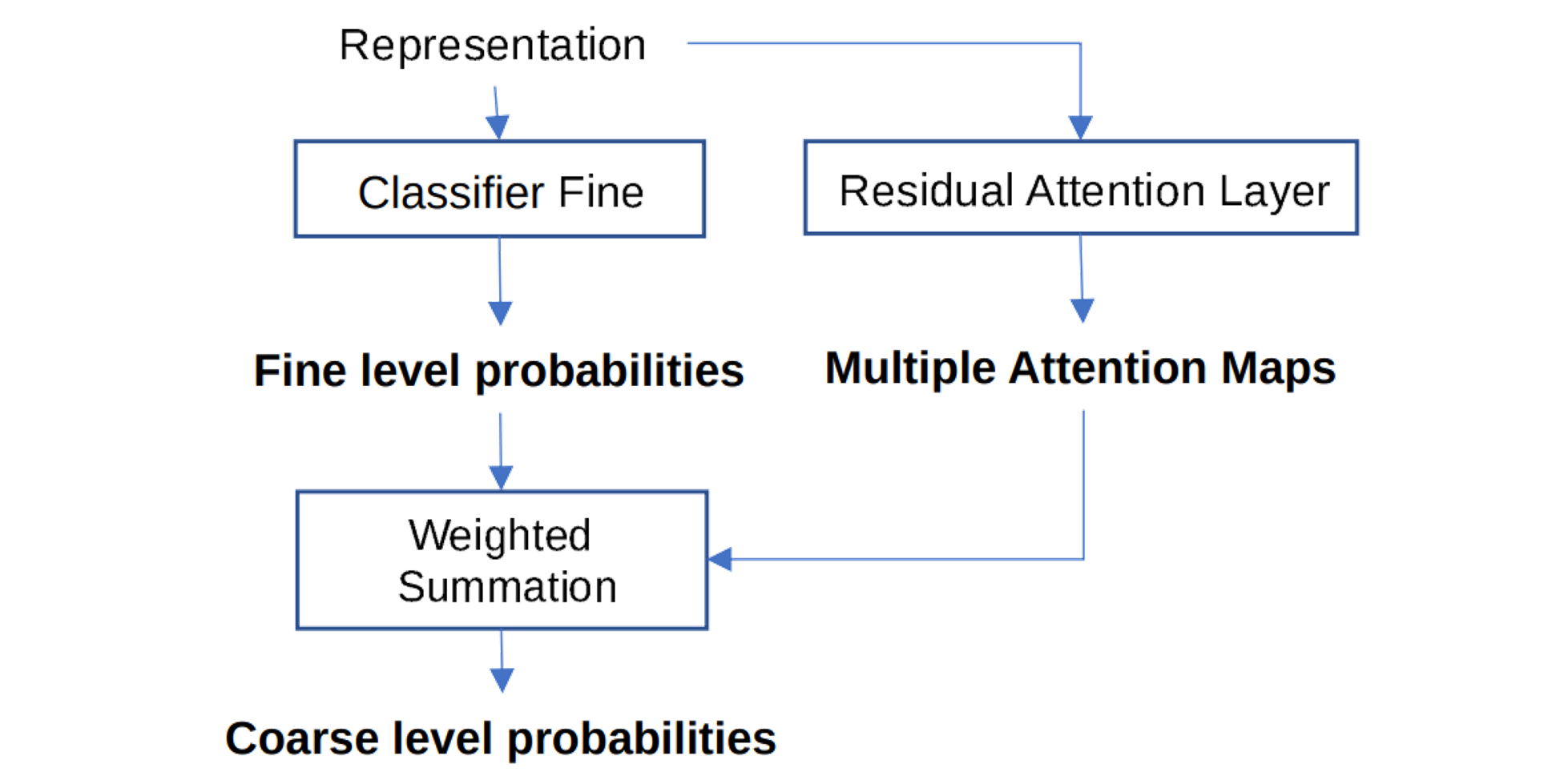

Automatic Music Tagging

[arXiv]

An Attention-based Approach To Hierarchical Multi-label Music Instrument Classification

Vocal Dereverberation

[arXiv] [demo]

Unsupervised Vocal Dereverberation with Diffusion-based Generative Models

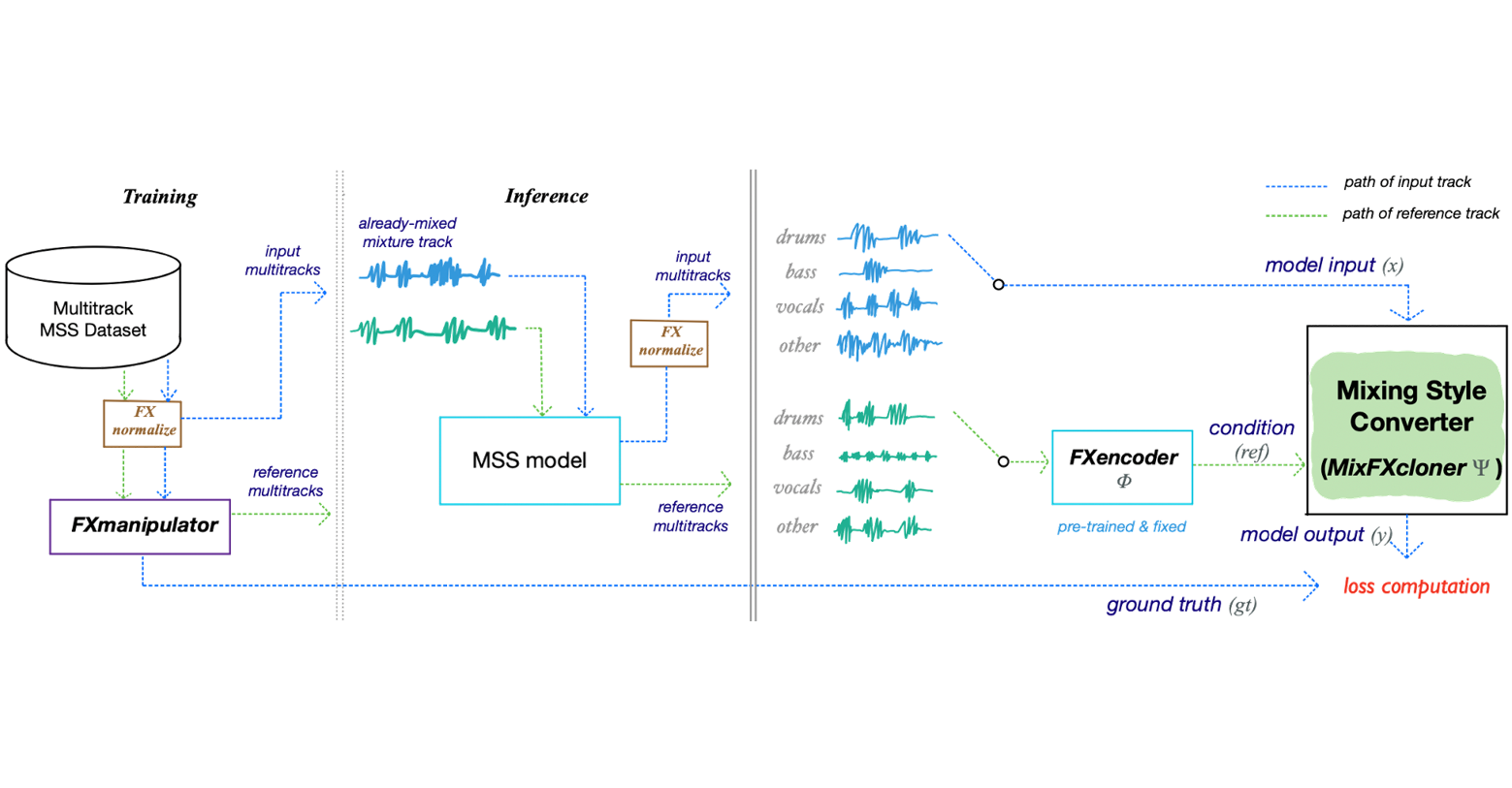

Mixing Style Transfer

[arXiv] [code] [demo]

Music Mixing Style Transfer: A Contrastive Learning Approach to Disentangle Audio Effects

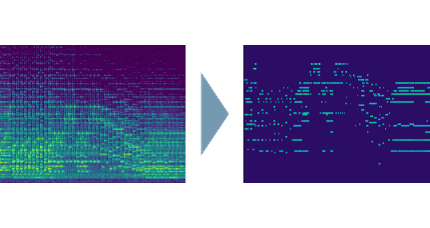

Music Transcription

[arXiv] [code] [demo]

DiffRoll: Diffusion-based Generative Music Transcription with Unsupervised Pretraining Capability

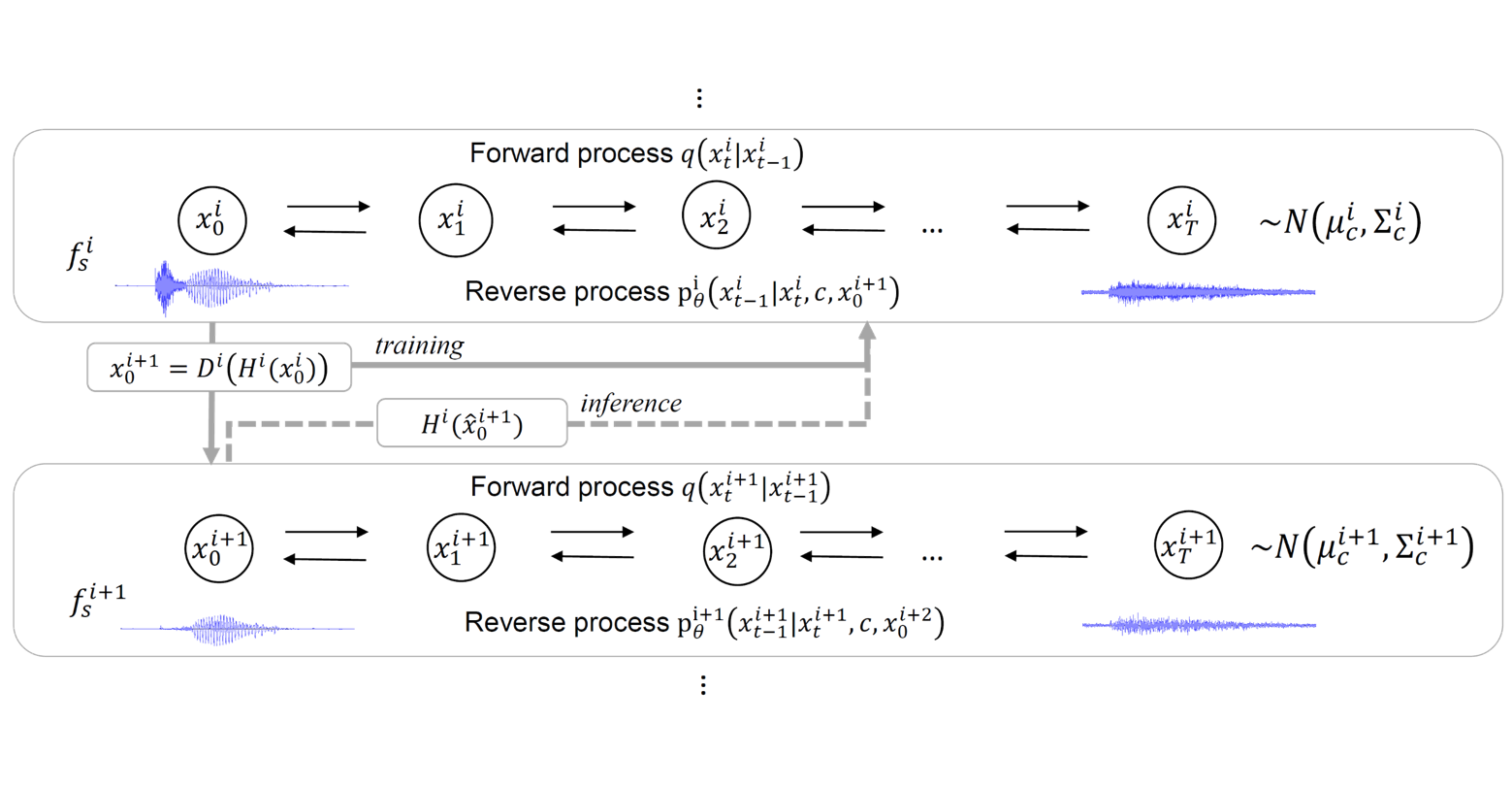

Singing Voice Vocoder

[arXiv] [demo]

Hierarchical Diffusion Models for Singing Voice Neural Vocoder

Distortion Effect Removal

[poster] [arXiv] [demo]

Distortion Audio Effects: Learning How to Recover the Clean Signal

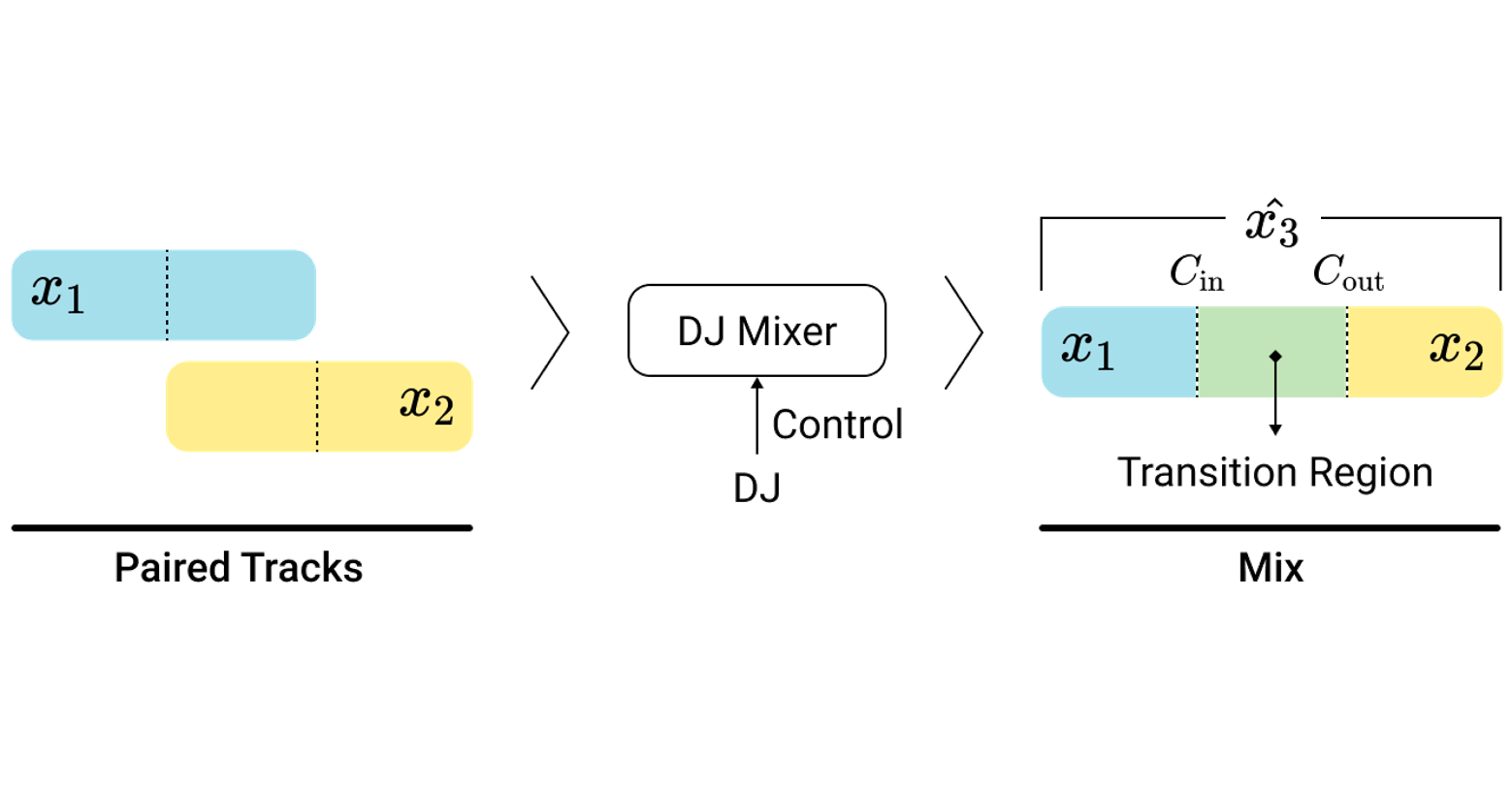

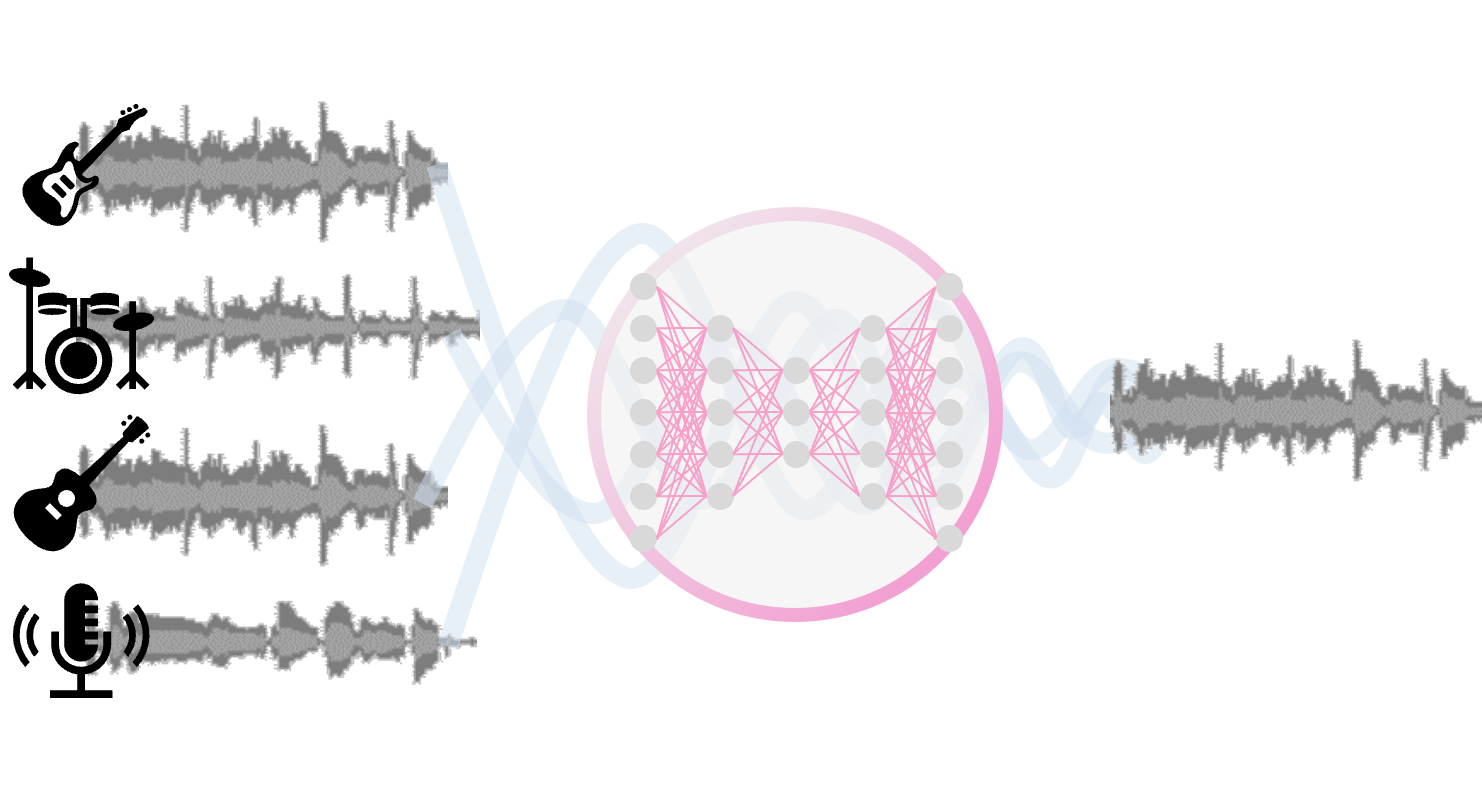

Automatic Music Mixing

[poster] [arXiv] [code] [demo]

Automatic Music Mixing with Deep Learning and Out-of-Domain Data

Cinematic Technologies

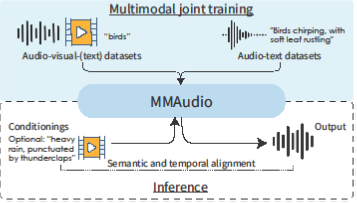

MMAudio

[arXiv] [code] [demo]

MMAudio: Taming Multimodal Joint Training for High-Quality Video-to-Audio Synthesis

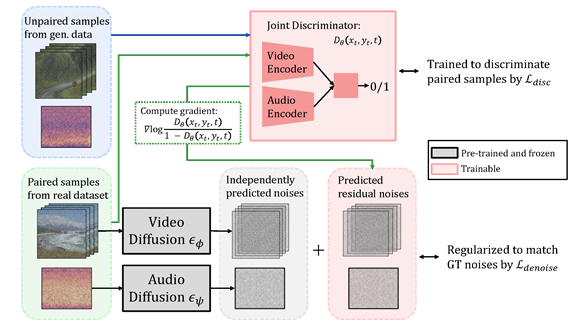

MMDisCo

[OpenReview] [arXiv] [code]

MMDisCo: Multi-Modal Discriminator-Guided Cooperative Diffusion for Joint Audio and Video Generation

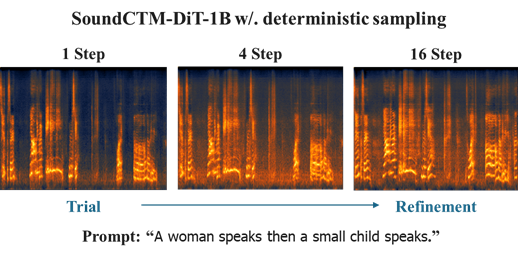

SoundCTM

[OpenReview] [arXiv] [code] [demo]

SoundCTM: Unifying Score-based and Consistency Models for Full-band Text-to-Sound Generation

Mining Your Own Secrets

[OpenReview] [arXiv]

Mining Your Own Secrets: Diffusion Classifier Scores for Continual Personalization of Text-to-Image Diffusion Models

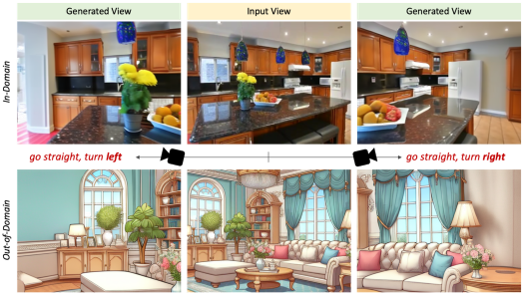

GenWarp

[arXiv] [demo]

GenWarp: Single Image to Novel Views with Semantic-Preserving Generative Warping

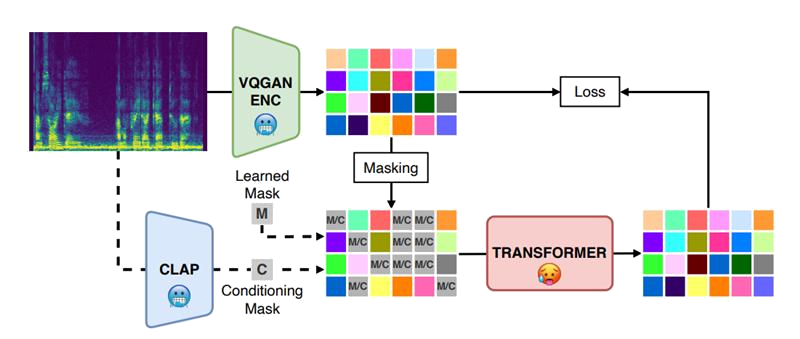

SpecMaskGIT

[arXiv] [demo]

SpecMaskGIT: Masked Generative Modeling of Audio Spectrograms for Efficient Audio Synthesis and Beyond

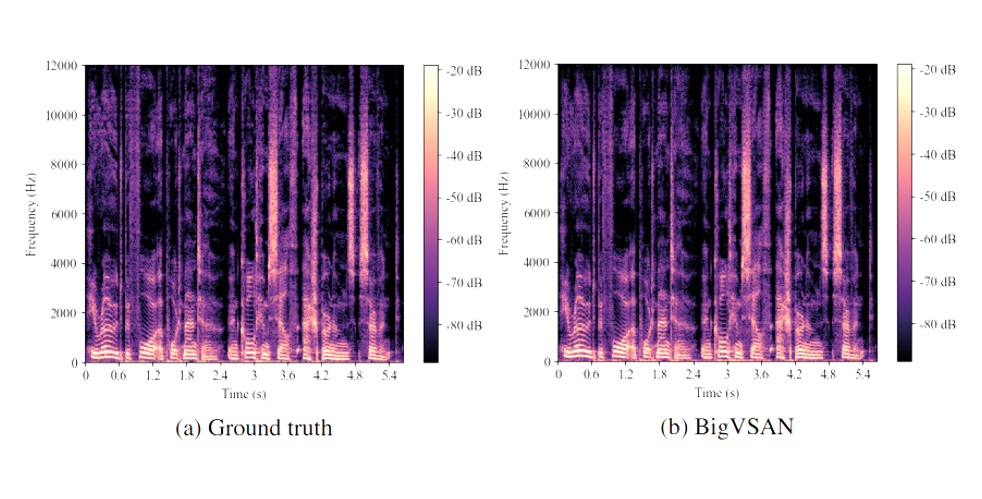

BigVSAN Vocoder

[arXiv] [code] [demo]

BigVSAN: Enhancing GAN-based Neural Vocoders with Slicing Adversarial Network

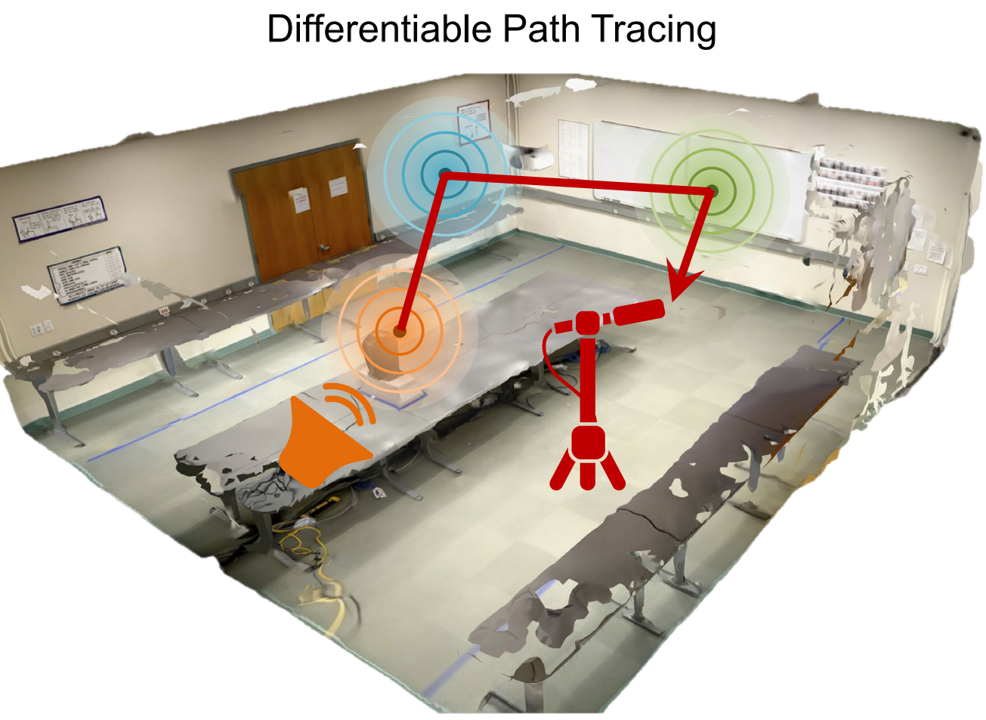

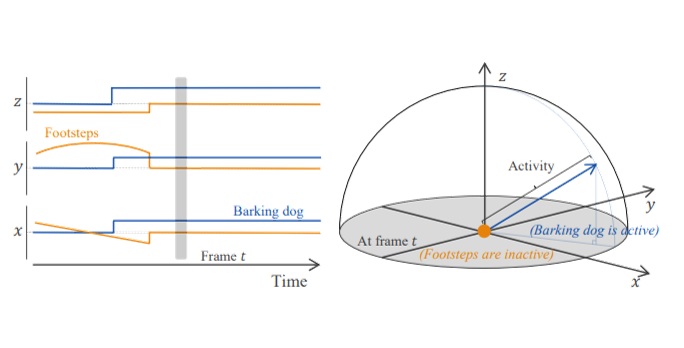

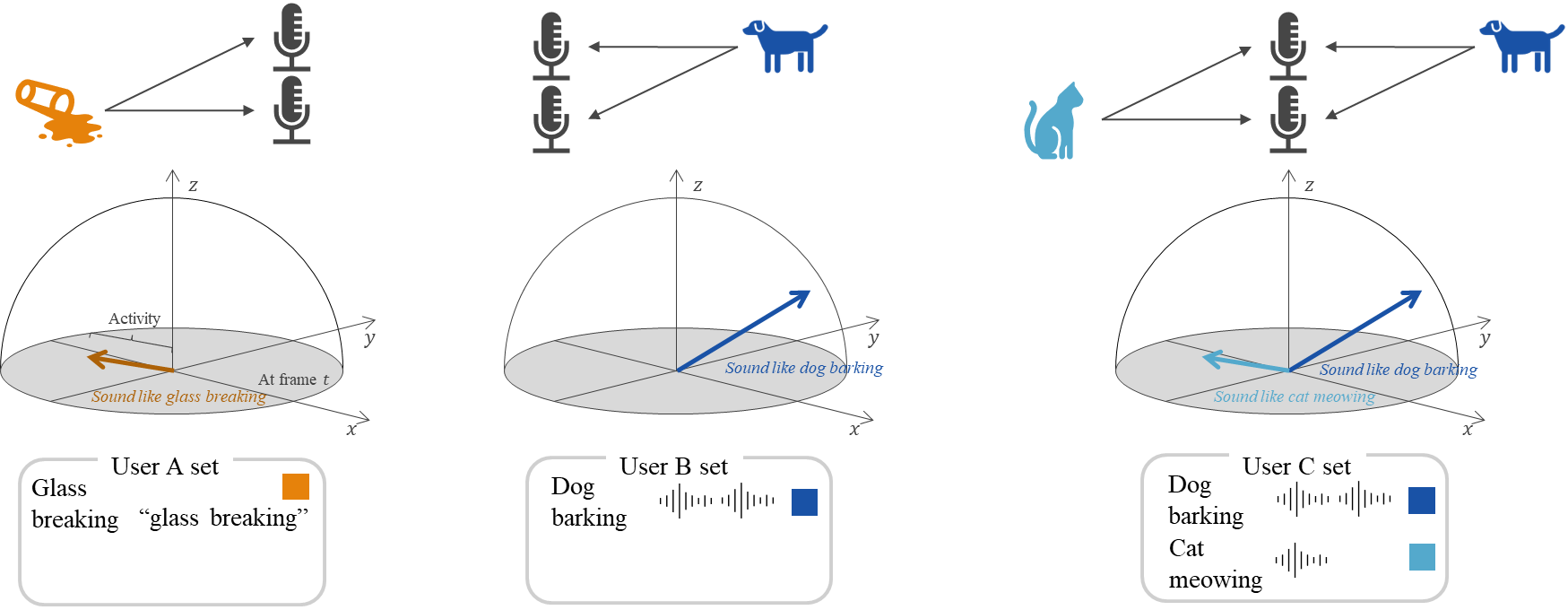

Zero-/Few-shot SELD

[IEEE] [arXiv]

Zero- and Few-shot Sound Event Localization and Detection

STARSS23

[arXiv] [dataset]

STARSS23: An Audio-Visual Dataset of Spatial Recordings of Real Scenes with Spatiotemporal Annotations of Sound Events

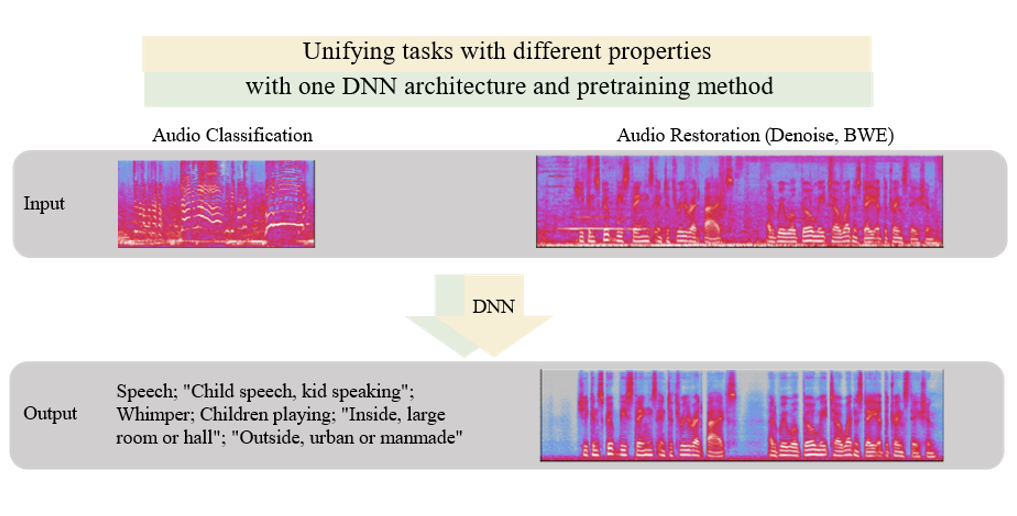

Audio Restoration: ViT-AE

[IEEE] [arXiv] [demo]

Extending Audio Masked Autoencoders Toward Audio Restoration

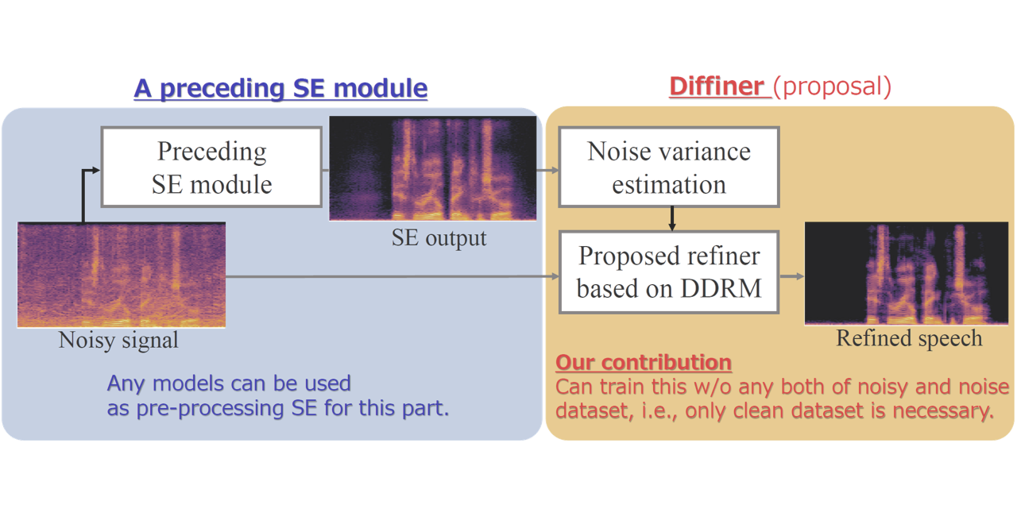

Diffiner

[ISCA] [arXiv] [code]

Diffiner: A Versatile Diffusion-based Generative Refiner for Speech Enhancement

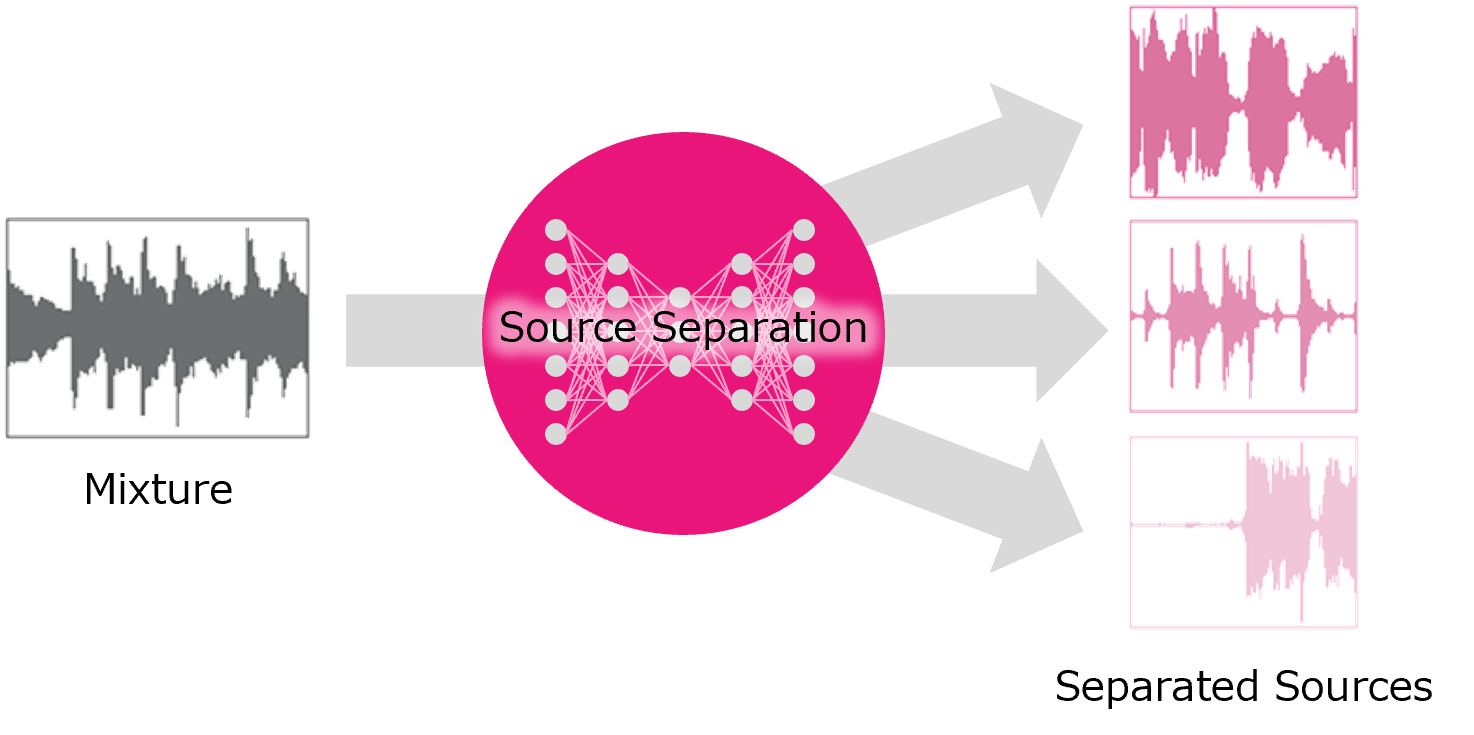

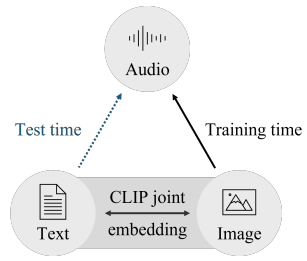

CLIPSep

[OpenReview] [arXiv] [code] [demo]

CLIPSep: Learning Text-queried Sound Separation with Noisy Unlabeled Videos

Hosted Challenges

DCASE Challenge Task 3

[DCASE Challenge 2023]

Sound Event Localization and Detection Evaluated in Real Spatial Sound Scenes